Automated Massacres: How 'Israel' Uses AI in its Aggression on Gaza

Using AI in the war on Gaza has raised concerns and questions among human rights activists with the increasing number of civilian casualties.

The use of artificial intelligence technologies is supposed to provide sufficient accuracy, meaning a reduction in errors, especially when used to achieve security and military objectives.

However, the Israeli Occupation uses this technology to commit further crimes in Gaza. For example, if it identifies a Palestinian fighter it wants to target among a thousand civilians, it employs artificial intelligence to kill that fighter and does not hesitate to annihilate everyone around them, disregarding the number of lives lost.

Although this technology enables the Israeli Occupation to accurately estimate how many people will be killed when it targets a specific residential building, neighborhood, or even a hospital, this does not deter it from striking. It deliberately targets and kills all of these people.

Since the beginning of the aggression on Gaza, the Israeli Occupation has sought to experiment with artificial intelligence systems, even if they are not accurate.

This has raised concerns and questions among human rights activists about the possibility of Israeli "random use" of this technology in war, especially with the increasing number of civilian casualties.

In 2019, the Israeli army established a new center aimed at using artificial intelligence "to accelerate the process of generating targets."

Former Chief of Staff of the Israeli army Aviv Kohavi stated in an interview with Yedioth Ahronoth on June 23, 2023, that the administrative department that identifies targets is a unit comprising hundreds of officers and soldiers, relying on artificial intelligence capabilities.

Kohavi summarized the mission of this military unit as a machine that, with the help of artificial intelligence, can process a lot of data better and faster than any human and translate it into attack targets.

He revealed that when this machine was first used in the 2021 war with Gaza, it generated 100 new targets every day, whereas before, there were 50 targets annually.

Mass Assassinations

One aspect of the "war crimes" committed by the Israeli Occupation army in Gaza, as revealed by a joint investigation by +972 magazine and the Local Call website on November 30, 2023, is that the Israeli army uses artificial intelligence programs to determine who resides in specific buildings, yet deliberately bombs them and kills hundreds of civilians inside.

The investigation, titled "'A mass assassination factory': Inside Israel's calculated bombing of Gaza," confirmed that the Israeli mass killing of Gaza residents is not random but deliberate and systematic.

It was confirmed that the Israeli army deliberately expands the targeting to objectives that are not military in nature in Gaza, including public buildings and infrastructure, in order to inflict damage on the Palestinian civilian community and "create shock" to pressure civilians against Hamas.

The magazine quoted an Israeli military official as saying, "When a 3-year-old girl is killed in a home in Gaza, it's because someone in the army decided it wasn't a big deal for her to be killed — that it was a price worth paying in order to hit [another] target."

According to the investigation, another reason for the large number of targets and the extensive harm to civilian life in Gaza is the widespread use of a system called "haBsora" (The Gospel), which is largely built on artificial intelligence and can "generate" targets almost automatically at a rate that far exceeds what was previously possible.

For this reason, a former Israeli intelligence officer described this artificial intelligence system as essentially facilitating a "mass assassination factory," as the goal is to kill as many Hamas activists as possible and greatly harm Palestinian civilians.

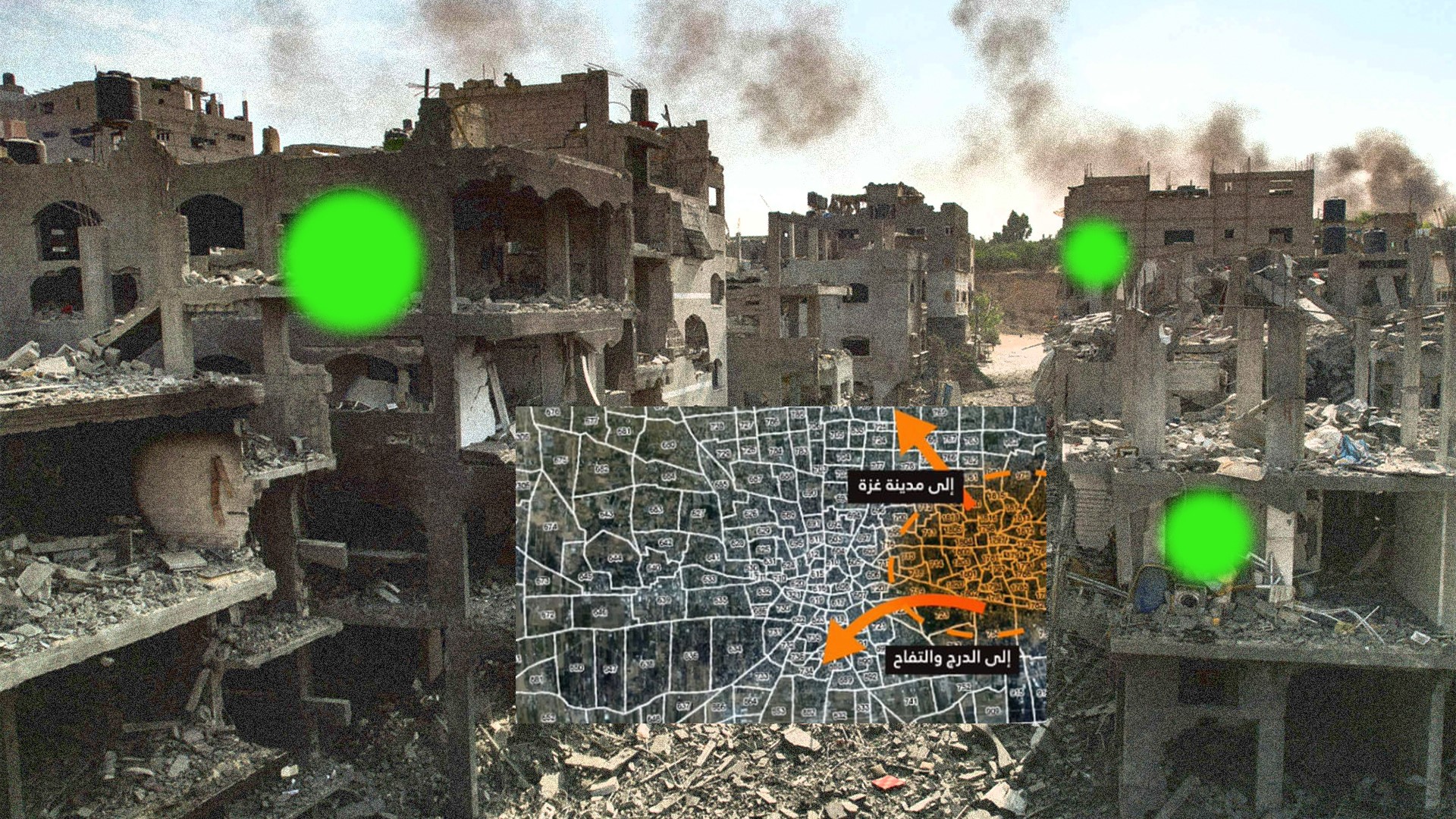

The result of these Zionist policies using this artificial intelligence program was a devastating loss of human lives in Gaza, where over 300 families lost 10 or more members in Israeli airstrikes during October and November 2023 alone.

According to the Israeli army, in the first five days of the aggression, it dropped 6,000 bombs on Gaza, totaling approximately 4,000 tons in weight, and destroyed entire neighborhoods.

For example, on October 25, 2023, the Israeli Occupation deliberately bombed the 12-story al-Taj residential building in Gaza without prior warning, killing the families living inside and burying around 120 people under the rubble of their apartments.

According to intelligence sources, the artificial intelligence system generates, among other things, automatic recommendations to attack private homes where individuals suspected of being Hamas or Islamic Jihad activists reside, and these recommendations are used to carry out mass assassinations.

The danger of this system is that it operates like a factory (of killing), leaving no time to examine each target in depth, an Israeli source told the magazine.

On October 11, 2023, a senior military official told The Jerusalem Post, speaking about the Israeli "target bank," that thanks to the army's artificial intelligence systems, new targets were being created faster than the Israeli air force could attack them.

These automated artificial intelligence systems greatly facilitated the work of Israeli intelligence officers in making decisions during military operations, including accounting for potential losses that they do not care about and classify as "collateral damage."

'Artificial Stupidity'

Because this technology, which "Israel" developed and labels "artificial intelligence," generates targets indiscriminately in its aggression against Gaza and has resulted in the killing of thousands of civilians, it has raised questions and concerns among human rights activists and groups, who consider it "stupidity" rather than intelligence given how the Israeli Occupation uses it.

"Israel" has been accused of "random use" of this technology in warfare, as indicated by a Politico report on March 3, 2024.

Rights groups affirmed that the rapid targeting system unique to this technology is what allowed "Israel" to bomb large parts of Gaza.

In its airstrikes on Gaza, the Israeli army relied on a system supported by artificial intelligence called the Gospel to assist in identifying targets, including schools, relief organization offices, places of worship, and medical facilities, according to the Politico report.

The Palestinian digital rights group, 7amleh, in a recent paper, stated that the use of automated weapons in the war "poses the most horrific threat to Palestinians."

Due to the artificial intelligence technology that increased the casualties of the aggression, the oldest and largest human rights organization in "Israel," the Association for Civil Rights, submitted a request to the legal department of the Israeli Occupation army in December 2023, demanding more transparency regarding the use of automated targeting systems in Gaza.

Heidy Khlaaf, the engineering director of Trail of Bits, a cybersecurity company based in the United Kingdom, told Politico that considering the high error rates in artificial intelligence systems, relying on automated targeting in warfare will result in inaccurate and biased results, becoming closer to random targeting.

She said that the use of automated weapons in warfare poses the most horrific threat to Palestinians.

Politico reported that pressure for more answers about the Israeli Occupation's artificial intelligence warfare would echo in the United States, which supplies "Israel" with this technology.

It also poses more obstacles to American lawmakers who seek to use artificial intelligence in future battlefields.

Politico believes that this accountability may force Washington to confront some uncomfortable questions about the extent to which it allows its ally to escape from the dilemma of using warfare reliant on artificial intelligence.

In December 2023, more than 150 countries endorsed a United Nations resolution speaking of serious challenges and concerns in the field of new military technologies, including artificial intelligence and autonomous weapon systems.

Killing Everyone

The latest development in the Israeli Occupation's use of artificial intelligence technology in war is the confirmation by Israeli intelligence officers, military officials, and sources to The New York Times on March 27, 2024, that "Israel" has begun using a facial recognition program.

They confirmed that the Israeli Occupation army is collecting and indexing facial images of Palestinians and can identify individuals within seconds, with the purpose of killing whomever it chooses.

Facial images are collected and stored without the knowledge or consent of Palestinian residents. Specific individuals are then targeted with the intent to kill everyone in the vicinity, and the system may also be used to attempt to arrest Palestinians.

Israeli military officials told the American newspaper that this technology was initially used in Gaza to search for Israelis who were captured by Hamas on October 7th as prisoners, but afterward, it began to be used extensively to identify any individuals with connections to Palestinian resistance.

The New York Times pointed out that facial recognition technologies supported by artificial intelligence systems are becoming more widespread around the world. Some countries use them to facilitate travel and mobility, while Russia has used them against minorities and to suppress opposition, and the Israeli Occupation has recently begun using them in warfare.

On November 19, 2023, Palestinian poet Mosab Abu Toha was walking through a military checkpoint on the highway amid a crowd of people when, to his surprise, Occupation soldiers called him by name and arrested him for questioning as part of facial recognition operations.

The newspaper quoted three officials in Israeli intelligence as saying that Abu Toha was identified through cameras using facial recognition artificial intelligence technology, which allows the identification of individuals and whether they are on the Israeli wanted list or not.

However, an Israeli officer admitted to the American newspaper that sometimes this technology mistakenly classifies civilians as wanted Hamas fighters.

The facial recognition program is managed by the Israeli Military Intelligence Unit 8200 and Electronic Intelligence Unit.

They use technology developed by the Israeli company Corsight, based on a vast archive of images captured by drones and other images found through Google, according to Israeli sources speaking to The New York Times.

Previously, the international human rights organization Amnesty International documented the Israeli Occupation's use of a facial recognition system known as Red Wolf against Palestinians in the West Bank in 2023, describing it as part of a constantly growing surveillance network that solidifies the Israeli government's control over Palestinians.

The American newspaper said that facial recognition technologies around the world supported by artificial intelligence systems are becoming more widespread and are being used by some countries for repression, confirming fears of the Israeli Occupation's misuse of this program.

The Israeli Occupation army has put artificial intelligence to other uses in warfare as well. For the first time, it used AI-enhanced targeting scopes developed by the company Smart Shooter, which are fitted to weapons such as rifles and machine guns.

Another technology is the use of drones capable of launching nets at other drones to disable them.

The Israeli army also resorted to drones that use artificial intelligence technologies to map the tunnels under Gaza, which Western estimates indicate extend for more than 500 kilometers. Some of these drones are capable of monitoring humans and operating underground.